EdTech Product Expectations in 2026

EdTech buyers in 2026 are more demanding than ever. Teachers expect tools that save time, not add clicks; schools expect clean integrations with their existing platforms; and learners expect personalization that feels helpful, not gimmicky. This article explains what “good enough” looks like now: AI that supports real classroom workflows, interoperability expectations, accessibility and privacy basics, proof of learning impact, and pricing that fits procurement reality. You’ll also get a practical way to define an MVP without overbuilding.

TL;DR: In 2026, EdTech isn’t judged by “cool features” — it’s judged by time saved for educators, measurable learning outcomes, and how well your product fits into existing systems. If your MVP doesn’t integrate cleanly, meet accessibility expectations, and earn trust around data, you’ll struggle to get past pilots.

Expectation #1: “Save teacher time” is the real killer feature

In most EdTech categories, your buyer is not the student. It’s the adults who guard their own time and carry the risk: teachers, admins, and IT.

What they expect in 2026:

- A workflow that reduces repetitive work (grading, feedback, planning, progress notes).

- Fast setup and minimal training.

- Fewer clicks than the current workaround (Google Docs, spreadsheets, LMS native tools).

If your product adds steps, even in the name of “better learning,” adoption will stall. The bar is: does this make a teacher’s week easier by Friday?

A useful framing for scope: define one “high-frequency” job (e.g., weekly quiz creation, rubric feedback, lesson differentiation) and ship the smallest version that makes that job meaningfully faster.

If you’re unsure how to cut scope without cutting value, start with How to Prioritize Features When You’re Bootstrapping Your Startup.

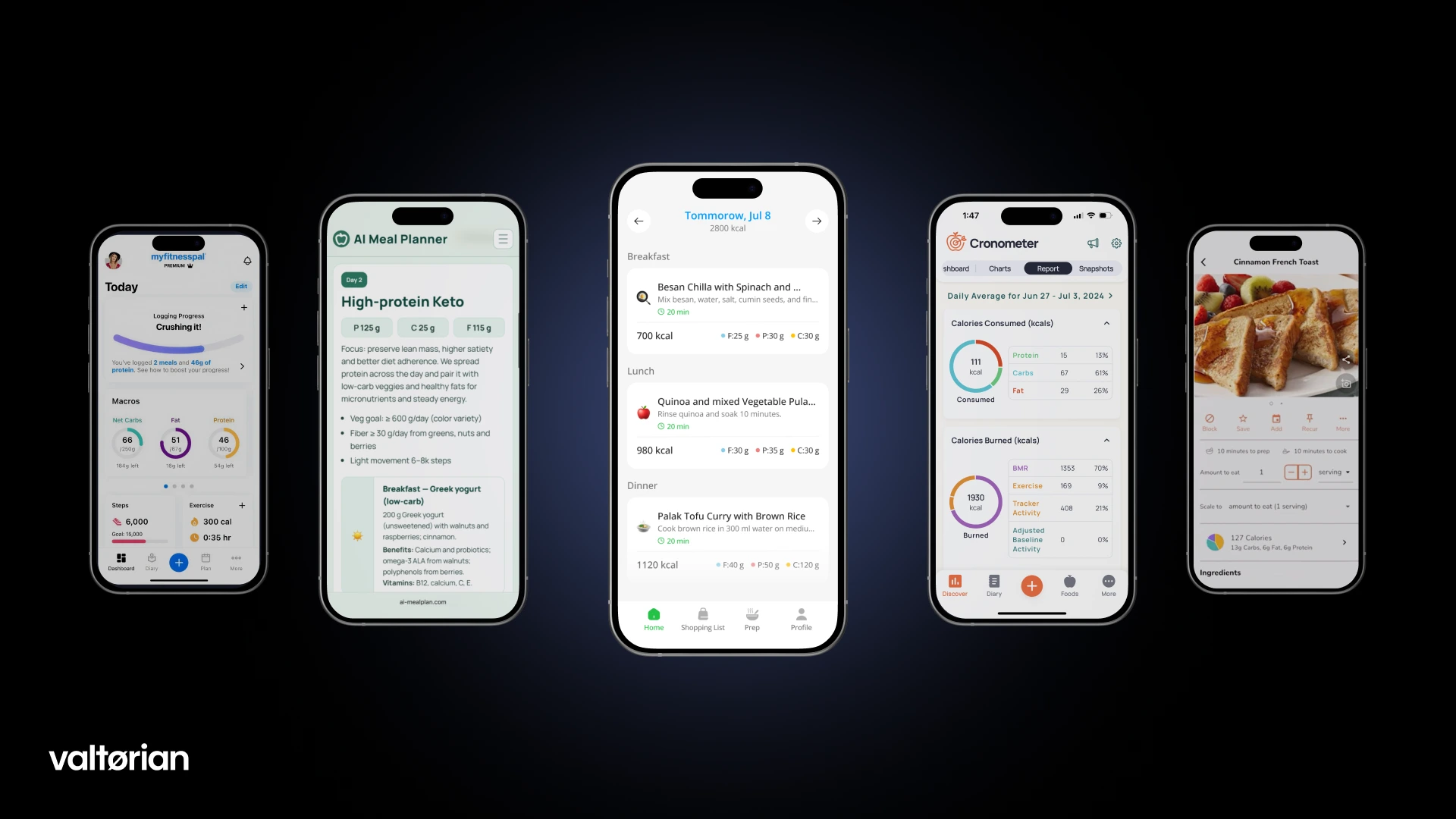

Expectation #2: AI is assumed — what matters is control and reliability

By 2026, “AI-powered” is table stakes in EdTech. The expectation is not magic — it’s assistive intelligence that stays inside boundaries.

Buyers expect:

- Clear controls (teacher can approve/edit before anything is shared with students).

- Predictable behavior and easy undo.

- Transparent limitations (no pretending the system “knows” more than it does).
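
One way to make “teacher approves before students see it” concrete is a simple status gate. The sketch below is illustrative only: `AiDraft`, `Status`, and `visible_to_students` are hypothetical names, not part of any specific product or library, and a real system would persist this state rather than hold it in memory.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    DRAFT = "draft"        # AI output, visible to the teacher only
    APPROVED = "approved"  # teacher signed off, safe to show students


@dataclass
class AiDraft:
    """Hypothetical container for one piece of AI-generated content."""
    text: str
    status: Status = Status.DRAFT
    history: list[str] = field(default_factory=list)  # earlier versions, kept for undo

    def edit(self, new_text: str) -> None:
        """Teacher edit: remember the old version and require fresh approval."""
        self.history.append(self.text)
        self.text = new_text
        self.status = Status.DRAFT

    def undo(self) -> None:
        """Restore the previous version, if any, and drop back to draft."""
        if self.history:
            self.text = self.history.pop()
            self.status = Status.DRAFT

    def approve(self) -> None:
        self.status = Status.APPROVED


def visible_to_students(drafts: list[AiDraft]) -> list[str]:
    """Only teacher-approved content is ever returned for the student view."""
    return [d.text for d in drafts if d.status is Status.APPROVED]
```

The design choice worth noting is that every edit and every undo resets the item to draft, so nothing reaches students without an explicit approval of the exact text they will see.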

A practical MVP move: use AI where it’s safest and most valuable early:

- Drafting lesson materials

- Generating practice questions

- Summarizing student work for teacher review

- Tagging and organizing content

The moment AI starts deciding instead of assisting, your trust burden skyrockets.

Expect buyers to ask about adaptive learning too: content and pacing that respond to how a learner is actually doing, not just static “personalization.”

For the founder-level perspective on what AI should (and shouldn’t) do in early MVPs, see AI-Powered MVP Development: Save Time and Budget Without Cutting Quality.

Expectation #3: Integrations aren’t “nice-to-have,” they’re the entry ticket

Schools and universities are already running an ecosystem: LMS, SIS, identity, rostering, content libraries, analytics, sometimes proctoring.

In 2026, buyers expect your product to fit into that stack instead of replacing it.

Two interoperability expectations show up again and again:

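- LTI (Learning Tools Interoperability) for integrating tools into an LMS in a standard way. LTI 1.3 is the modern, more secure version: it builds on OAuth 2.0, OpenID Connect, and signed JSON Web Tokens rather than the OAuth 1.0a signing used by earlier versions.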

- OneRoster for exchanging roster and related data between systems (including CSV and REST-based exchange).

You don’t need to build “every integration” in v1. But you do need a believable path:

- SSO / basic identity flow

- Roster import (even if initially via CSV)

- LMS launch experience that doesn’t feel hacked together
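
A CSV roster import can stay small in v1 and still feel trustworthy if it reports problems row by row instead of failing silently. The sketch below assumes a file whose column names are modeled on the OneRoster 1.1 `users.csv` format (`sourcedId`, `role`, `givenName`, `familyName`, `email`). It reads only that subset, so check the specification before relying on it. `RosterUser` and `parse_roster` are hypothetical names.

```python
import csv
import io
from dataclasses import dataclass

# Assumed subset of columns, modeled on OneRoster 1.1 users.csv.
REQUIRED = ("sourcedId", "role", "givenName", "familyName", "email")


@dataclass(frozen=True)
class RosterUser:
    sourced_id: str
    role: str
    given_name: str
    family_name: str
    email: str


def _clean(row: dict, key: str) -> str:
    """Treat missing cells as empty strings and trim whitespace."""
    return (row.get(key) or "").strip()


def parse_roster(csv_text: str) -> tuple[list[RosterUser], list[str]]:
    """Return (valid users, readable errors) rather than raising on the first bad row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = [c for c in REQUIRED if c not in (reader.fieldnames or [])]
    if missing:
        return [], [f"missing column(s): {', '.join(missing)}"]

    users: list[RosterUser] = []
    errors: list[str] = []
    seen: set[str] = set()
    for row in reader:
        line = reader.line_num  # line of the file just read (header is line 1)
        sid = _clean(row, "sourcedId")
        if not sid:
            errors.append(f"line {line}: empty sourcedId")
            continue
        if sid in seen:
            errors.append(f"line {line}: duplicate sourcedId {sid}")
            continue
        seen.add(sid)
        users.append(RosterUser(
            sid,
            _clean(row, "role"),
            _clean(row, "givenName"),
            _clean(row, "familyName"),
            _clean(row, "email"),
        ))
    return users, errors
```

Returning the error list alongside the valid rows lets an admin fix a spreadsheet and re-upload without guessing what went wrong.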

If you ignore integrations, your product becomes “another login” teachers won’t use.

If you want a simple way to think about architecture choices without going deep technical, read Web App Development for Startups: Architecture Basics for Non-Tech Founders.

Expectation #4: Accessibility is not optional anymore

Education products are expected to work for all learners—across devices, contexts, and abilities.

In practice, teams often treat accessibility as “later.” In 2026, that’s risky—especially when selling into institutions.

A reasonable baseline to design against is WCAG, with WCAG 2.2 being the current W3C recommendation.

(Exact legal requirements vary by country/sector—treat this as product best practice, not legal advice.)

Founders should assume buyers will notice basics like:

- Keyboard navigation that actually works

- Visible focus states

- Captions/transcripts where relevant

- Readable contrast and sizing
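
“Readable contrast” is one of the few items on this list you can check automatically. The sketch below follows the relative luminance and contrast ratio formulas published in WCAG 2.x and compares the result with the AA thresholds (4.5:1 for normal text, 3:1 for large text). The function names are illustrative, and it only handles six-digit hex colors.

```python
def _linear(channel_8bit: int) -> float:
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x formula)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1.0 (identical) up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Check against the WCAG AA minimum: 4.5:1 normal text, 3:1 large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)
```

For example, `passes_aa("#767676", "#ffffff")` is true while `passes_aa("#777777", "#ffffff")` is false for normal-size text, which shows how small a color change can tip a design over the line. A check like this covers contrast only; keyboard navigation and focus states still need manual testing.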

Accessibility is one of those things that feels like “polish,” until it becomes a dealbreaker in procurement.

Expectation #5: Proof beats promises — outcomes must be measurable

EdTech buyers in 2026 are skeptical. They’ve seen too many tools with shiny demos and unclear impact.

So expectations shift to:

- Clear success metrics for the pilot (what improves, by how much, in what timeframe)

- Basic reporting that maps to educator/admin questions

- Evidence of engagement and learning progress (even if early and simple)

Your MVP should ship with measurement built in—not because investors love dashboards, but because buyers need justification.

A practical mindset: define 3–5 metrics tied to the workflow you improve:

- Time saved (teacher-facing)

- Completion/participation (student-facing)

- Quality proxy (fewer resubmissions, higher rubric scores, faster mastery)
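
The three metric types above can be computed from very little data. The following is a minimal sketch, assuming you collect per-task timings before and during the pilot plus simple counts of assignments and submissions. `PilotSummary` and `summarize_pilot` are hypothetical names, and the sample numbers in the usage note are made up for illustration.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class PilotSummary:
    median_minutes_saved: float  # teacher-facing: time saved per task
    completion_rate: float       # student-facing: completed / assigned
    resubmission_rate: float     # quality proxy: resubmissions / submissions


def _rate(part: int, whole: int) -> float:
    """Safe ratio that returns 0.0 when there is no data yet."""
    return part / whole if whole else 0.0


def summarize_pilot(
    baseline_minutes: list[float],  # time per task before the tool
    tool_minutes: list[float],      # time per task with the tool
    assigned: int,
    completed: int,
    submissions: int,
    resubmissions: int,
) -> PilotSummary:
    """Reduce raw pilot data to the three numbers a buyer will ask about."""
    saved = median(baseline_minutes) - median(tool_minutes)
    return PilotSummary(
        median_minutes_saved=saved,
        completion_rate=_rate(completed, assigned),
        resubmission_rate=_rate(resubmissions, submissions),
    )
```

With illustrative inputs such as `summarize_pilot([40, 50, 60], [20, 25, 30], assigned=30, completed=24, submissions=24, resubmissions=3)`, the summary reports 25 minutes saved per task, an 80% completion rate, and a 12.5% resubmission rate. The median is used instead of the mean so that one unusually slow week does not distort the time-saved figure.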

If you want a founder-friendly view of what to track early, see Your First Product Metrics Dashboard: What Early-Stage Investors Want to See.

Expectation #6: Privacy and data boundaries must be explicit

In EdTech, trust isn’t marketing — it’s product behavior.

Even at MVP stage, buyers expect you to answer:

- What data do you collect, and why?

- Where is it stored?

- Who can access it?

- How is it deleted?

- What happens if a student account is removed?

Depending on who you sell to and where, there may be specific regulations to consider (for example, different rules for K–12 vs higher ed, and US vs EU). Don’t guess—define a conservative data boundary early and get competent legal guidance when you approach real institutional deals.

From a product standpoint, the winning move is usually: collect less, store less, retain less.
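
One lightweight way to hold yourself to that is a data inventory kept next to the code: every stored field has a stated purpose and a retention period, and anything outside the inventory is flagged. The sketch below is an assumption-laden illustration, not a compliance tool. The field names, purposes, and retention periods are invented examples, and `DataField`, `undocumented`, and `is_expired` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class DataField:
    name: str
    purpose: str         # answers "what do you collect, and why?"
    retention_days: int  # answers "how long until it is deleted?"


# Illustrative inventory only; real values depend on your product and legal advice.
INVENTORY = [
    DataField("email", "account login and teacher notifications", 365),
    DataField("quiz_score", "progress reporting for the teacher", 180),
]


def undocumented(collected: set[str], inventory: list[DataField]) -> set[str]:
    """Fields the product stores that the inventory does not justify."""
    return collected - {f.name for f in inventory}


def is_expired(data_field: DataField, collected_on: date, today: date) -> bool:
    """True once a record has outlived its stated retention period."""
    return today > collected_on + timedelta(days=data_field.retention_days)
```

Running `undocumented` in a test suite against the columns your database actually stores turns “collect less” from an intention into a check that fails when someone adds a field without a reason.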

Expectation #7: Procurement reality shapes product decisions

This is the part founders often learn too late: institutional EdTech is not a “self-serve SaaS” market by default.

Expectations you’ll face:

- Security review (even if lightweight at first)

- Stakeholder approval (IT + academic lead + admin)

- Longer decision cycles

- Budget timing constraints

This impacts your MVP strategy. Many successful teams start with one of these wedges:

- Teacher-led adoption in a narrow use case (then expand)

- A department pilot (higher ed)

- A district initiative with a clear operational pain

If you’re building B2B EdTech, your MVP isn’t just “features” — it’s also onboarding, permissions, reporting, and a rollout story.

For a broader 2026 lens on what’s changing in software expectations overall, read What Non-Technical Founders Should Know in 2026.

A simple MVP blueprint that matches 2026 expectations

Here is a practical sequence that keeps you out of “overbuild” territory:

- Pick one buyer and one daily/weekly workflow (teacher, admin, or learner—choose one primary).

- Define the measurable outcome (time saved, completion, mastery proxy).

- Build the smallest version that delivers that outcome with minimal data collection.

- Add one integration path that removes the biggest adoption friction (often LMS launch or roster import).

- Instrument the workflow so you can prove impact within the pilot window.

If you want a sharp reminder of what tends to go wrong when scope creeps, Why MVPs Still Fail in 2026 is a good gut check.

Thinking about building an EdTech product in 2026?

At Valtorian, we help founders design and launch modern web and mobile apps — including AI-powered workflows — with a focus on real user behavior, not demo-only prototypes.

Book a call with Diana

Let’s talk about your idea, scope, and fastest path to a usable MVP.

FAQ

What do EdTech buyers care about most in 2026?

Time saved for educators, measurable outcomes, and low-friction adoption inside existing systems.

Do we need AI in an EdTech product now?

Not always, but buyers increasingly expect AI-assisted workflows. If you use AI, prioritize control, reliability, and safe boundaries.

Should we build LMS/SIS integrations in the first release?

Not all of them. But you should have a credible integration path — often starting with LMS launch/SSO and roster import.

How early should we worry about accessibility?

From day one. Accessibility is expensive to “bolt on” later, and institutions often expect WCAG-aligned behavior.

How can an MVP prove learning impact without a huge study?

Start with workflow metrics: time saved, completion rates, engagement, and a simple quality proxy tied to your use case.

What’s the fastest way to get adoption in schools?

Solve one high-frequency teacher/admin pain, make onboarding effortless, and run a tight pilot with clear success criteria.
